Imagine an ecommerce company selling pool noodles worldwide. The sales team, located in the US, works with dashboards that aggregate revenue and profit per product and product category. This team expects currency in USD. Meanwhile, the marketing team running ads on Google is located in Europe. They also rely on revenue and profit data; however, they expect currency in EUR. This problem is often referred to as "currency localization", and in this article, I'll demonstrate how to approach it in a BigQuery data warehouse (DWH) in Google Cloud Platform (GCP).

Thoughts on Converting Currency

Converting units can be as easy as applying a conversion factor conv.

For instance, a kilometer is 1000 meters, and conv = 1000 if I want to convert kilometers into meters - in an equation, that's m = conv * km.

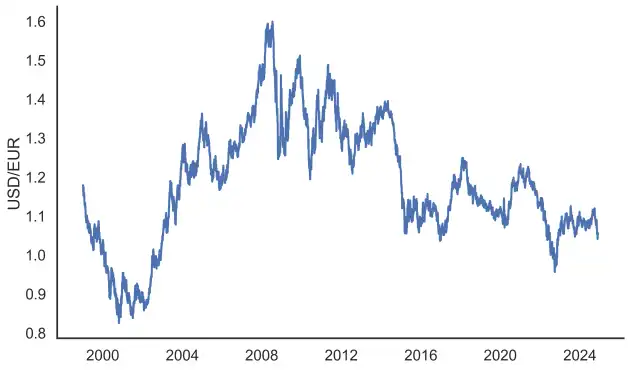

Currency conversion follows a similar principle, but with a crucial difference: the conversion factor, known as the foreign exchange (fx) rate, isn't constant over time.

For example, at some point in 2009, 1 EUR was worth 1.5 USD, but today (November 2024), 1 EUR is roughly worth 1.1 USD.

In the formula, this means that EUR = fx(t) * USD.

To convert between currencies, we need a specific point in time t for (i) the fx-rate and (ii) the transcation (payment).

So, if someone buys a pool noodle on 2024-10-30 at 16:00 in USD and we want to know that value in EUR, we need the fx-rate on 2024-10-30 at 16:00.

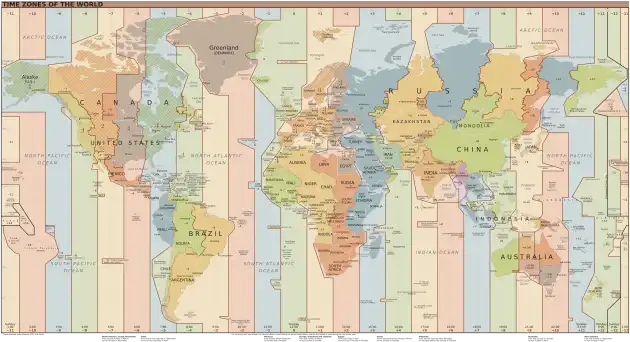

However, the complexity doesn't stop there: a buyer's location and the time zone in which the exchange rate is recorded might differ. This means the specific point in time t itself depends on the time zone tz, requiring us to consider fx(t(tz)).

On top of this, the way in which fx rates are determined involves a lot of nuance. Currency is publicly traded, and the market's fx rates change in real time.

To make this complexity manageable, we'll make the simplifying assumption that daily aggregated exchange rates are sufficient. For example, consider the format in which they are released by the European Central Bank (ECB) on workdays1.

Thoughts on Time Zones

The ECB releases data for fx-rates every workday at around 16:00 CET that represent the effective exchange rates of the same day at 14:10CET.2 Returning to the earlier example: if someone buys a pool noodle on 2024-10-30 at 16h00 in Germany, the ECB's fx rate from 2024-10-30 is applicable. But if they buy the pool noodle on 2024-10-30 at 15:00 in Hawaii, we'll need to convert time zones.

Standard practice in data warehousing is to convert from local time to UTC, and then query dates in UTC. For example, a transaction that takes place in Hawaii at 2024-10-09 15:00 converts to 2024-10-10 01:00 UTC, meaning that the next day's FX rate would be required for a correct conversion. It's easy to go wrong here, soconverting all transaction times to UTC is a sound strategy for coherent results.

Table Design - Wide Table

I recommend two table designs to support multiple currencies: either by joining transactions with a table of FX rates or by storing many currencies in a wide table.

In the wide table approach, a transaction and its values in different currencies are stored in a single row. This results in many columns, making the table "wide". Analytical (OLAP) databases, such as BigQuery, are optimized for supporting tables with thousands of columns without negative performance implications. This makes it feasible to move the currency conversion logic into the application layer that writes data into the wide table. For instance, on Google Cloud Platform, imagine a Pub/Sub message queue containing the original transaction, and Cloud Run workers converting currencies and writing them into the wide table. That wide table might look as follows:

| id | description | date_utc | date_local | date_local_tz | amount | currency | usd | eur | sek | nok | ... |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Noodle | 2023-12-31 23:59:59 | 2024-01-01 00:59:59 +0100 | Europe/Berlin | 900.00 | eur | 1000.00 | 900.00 | 8500.00 | 8000.00 | ... |

| 7 | Noodle | 2024-01-15 10:00:00 | 2024-01-15 11:00:00 +0100 | Pacific/Honolulu | 45.00 | eur | 50.00 | 45.00 | 425.00 | 400.00 | ... |

Selecting the right currency from this table is trivial by writing some SQL:

SELECT id, description, date_local, usd FROM wide_tableTable Design - Joining Tables

A wide table design might be a poor choice for a transactional (OLTP) database. OLTP databases store records row-wise in memory, leading to inefficient compression of the stored data, as well as diminished performance when running queries that access and aggregate large amounts of data.

A straightforward solution in this scenario is the creation of a table fb_rates that contains FX rates, and joining transactions with that table to derive the localized currency value.

For this purpose, fx_rates might look as follows:

| date_utc | fx_from | fx_to | fx_rate |

|---|---|---|---|

| 2024-10-09 | EUR | USD | 1.0962 |

| 2024-10-09 | EUR | GBP | ... |

| ... | ... | ... | ... |

It is important to note that FX rates have direction, which is represented by the fx_from and fx_to columns. In the context of fx_rates, one unit of the fx_from currency converts into an amount of fx_rate in the fx_to currency on day date_utc.

Assume the transactions table looks as follows:

| id | description | transaction_time | amount | currency |

|---|---|---|---|---|

| 1 | Blue Noodle | 2024-10-09 12:00:00 Europe/Berlin | 1000.00 | EUR |

| 7 | Yellow Noodle | 2024-10-09 15:00:00 Pacific/Honolulu | 50.00 | EUR |

| ... | ... | ... | ... | ... |

We can then write an SQL query that converts the transaction's amount into any desired currency. Here is a sample query that performs the conversion in BigQuery SQL:

SELECT

t.id,

t.description,

fx.fx_rate,

t.amount AS amount_eur,

t.amount * fx.fx_rate AS amount_usd,

DATETIME(t.transaction_time) as t_date_utc,

FROM transactions AS t

LEFT JOIN fx_rates AS fx

ON fx.date_utc = EXTRACT(DATE FROM TIMESTAMP(t.transaction_time))The crucial part of this query is the EXTRACT(DATE FROM TIMESTAMP(t.transaction_time)), which converts the timezone-aware transaction timestamp to an UTC date, allowing us to join it with the daily exchange rate in the fx_rates table.

Note that for the query to work, it is essential that the transaction_time values in the transactions table contain timezone information.

Wrapping it up

Those are two approaches I recommend for solving currency localization. It's also possible to mix the two approaches - in a data warehouse, one could join tables (second approach) to create the wide table design I recommend in the first approach.

Broadly speaking, a wide table facilitates simple querying and keeps complexity low - all the relevant data is stored in one table, and all calculations are performed outside the database. The approach that joins two tables allows engineers to use the database to perform the currency conversion, eliminating the dependency on external transformations. However, this solution is harder to maintain, and it's easier to make a mistake.